
A global catastrophic risk or a doomsday scenario is a hypothetical future event that could damage human well-being on a global scale,[2] even endangering or destroying modern civilization.[3] An event that could cause human extinction or permanently and drastically curtail humanity's potential is known as an "existential risk."[4]

Over the last two decades, a number of academic and non-profit organizations have been established to research global catastrophic and existential risks, formulate potential mitigation measures and either advocate for or implement these measures.[5] [6] [7] [8]

Definition and nomenclature

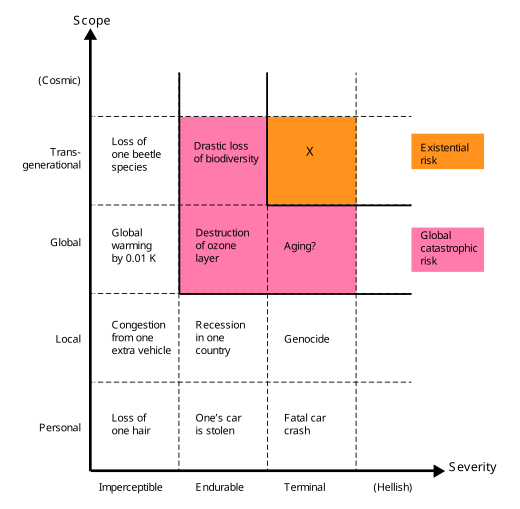

Scope/intensity grid from Bostrom's paper "Existential Risk Prevention as Global Priority"[9]

Defining global catastrophic risks

The term global catastrophic risk "lacks a sharp definition", and generally refers (loosely) to a risk that could inflict "serious damage to human well-being on a global scale".[10]

Humanity has suffered large catastrophes before. Some of these have caused serious damage but were only local in scope; for example, the Black Death may have resulted in the deaths of a third of Europe's population,[11] 10% of the global population at the time.[12] Some were global, but were not as severe; for example, the 1918 influenza pandemic killed an estimated 3-6% of the world's population.[13] Most global catastrophic risks would not be so intense as to kill the majority of life on earth, but even if one did, the ecosystem and humanity would eventually recover (in contrast to existential risks).

Similarly, in Catastrophe: Risk and Response, Richard Posner singles out and groups together events that bring about "utter overthrow or ruin" on a global, rather than a "local or regional", scale. Posner highlights such events as worthy of special attention on cost–benefit grounds because they could directly or indirectly jeopardize the survival of the human race as a whole.[14]

Defining existential risks

Existential risks are defined as "risks that threaten the destruction of humanity's long-term potential."[15] The instantiation of an existential risk (an existential catastrophe[16]) would either cause outright human extinction or irreversibly lock in a drastically inferior state of affairs.[9] [17] Existential risks are a sub-class of global catastrophic risks, where the damage is not only global but also terminal and permanent, preventing recovery and thereby affecting both current and all future generations.[9]

Non-extinction risks

While extinction is the most obvious way in which humanity's long-term potential could be destroyed, there are others, including unrecoverable collapse and unrecoverable dystopia.[18] A disaster severe enough to cause the permanent, irreversible collapse of human civilization would constitute an existential catastrophe, even if it fell short of extinction.[18] Similarly, if humanity fell under a totalitarian regime, and there were no chance of recovery, then such a dystopia would also be an existential catastrophe.[19] Bryan Caplan writes that "perhaps an eternity of totalitarianism would be worse than extinction".[19] (George Orwell's novel Nineteen Eighty-Four suggests[20] an example.[21]) A dystopian scenario shares the key features of extinction and unrecoverable collapse of civilization: before the catastrophe, humanity faced a vast range of bright futures to choose from; after the catastrophe, humanity is locked forever in a terrible state.[18]

Potential sources of risk

Potential global catastrophic risks include anthropogenic risks, caused by humans (technology, governance, climate change), and non-anthropogenic or natural risks.[3] Technological risks include the creation of destructive artificial intelligence, biotechnology or nanotechnology. Insufficient or malign global governance creates risks in the social and political domain, such as a global war, including nuclear holocaust, bioterrorism using genetically modified organisms, cyberterrorism destroying critical infrastructure like the electrical grid; or the failure to manage a natural pandemic. Global catastrophic risks in the domain of earth system governance include global warming, environmental degradation, including extinction of species, famine as a result of non-equitable resource distribution, human overpopulation, crop failures and non-sustainable agriculture.[citation needed]

Examples of non-anthropogenic risks are an asteroid impact event, a supervolcanic eruption, a lethal gamma-ray burst, a geomagnetic storm destroying electronic equipment, natural long-term climate change, hostile extraterrestrial life, or the predictable transformation of the Sun into a red giant star engulfing the Earth.

Methodological challenges

Research into the nature and mitigation of global catastrophic risks and existential risks is subject to a unique set of challenges and, as a result, is not easily subjected to the usual standards of scientific rigour.[18] For instance, it is neither feasible nor ethical to study these risks experimentally. Carl Sagan expressed this with regards to nuclear war: "Understanding the long-term consequences of nuclear war is not a problem amenable to experimental verification".[22] Moreover, many catastrophic risks change rapidly as technology advances and background conditions, such as geopolitical conditions, change. Another challenge is the general difficulty of accurately predicting the future over long timescales, especially for anthropogenic risks which depend on complex human political, economic and social systems.[18] In addition to known and tangible risks, unforeseeable black swan extinction events may occur, presenting an additional methodological problem.[18] [23]

Lack of historical precedent

Humanity has never suffered an existential catastrophe and if one were to occur, it would necessarily be unprecedented.[18] Therefore, existential risks pose unique challenges to prediction, even more than other long-term events, because of observation selection effects.[24] Unlike with most events, the failure of a complete extinction event to occur in the past is not evidence against their likelihood in the future, because every world that has experienced such an extinction event has no observers, so regardless of their frequency, no civilization observes existential risks in its history.[24] These anthropic problems may partly be avoided by looking at evidence that does not have such selection effects, such as asteroid impact craters on the Moon, or directly evaluating the likely impact of new technology.[9]
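The observation selection effect described above can be illustrated with a small simulation. This is only a sketch under stated assumptions: the per-period extinction probabilities, the number of periods, and the function names are hypothetical choices made for illustration and do not come from the cited sources.

```python
import random


def count_surviving_worlds(p_extinction, periods, n_worlds, seed=0):
    """Count simulated worlds that pass every period without extinction.

    p_extinction is an assumed, illustrative per-period probability.
    """
    rng = random.Random(seed)
    survivors = 0
    for _ in range(n_worlds):
        # A world keeps its observers only if no period ends in extinction.
        if all(rng.random() >= p_extinction for _ in range(periods)):
            survivors += 1
    return survivors


def extinctions_in_observed_history(world_survived):
    """Number of extinction events an observer can find in their own past.

    Only surviving worlds contain observers, and a surviving world has,
    by construction, no extinction event in its history.
    """
    if not world_survived:
        raise ValueError("an extinct world has no observers")
    return 0


if __name__ == "__main__":
    for p in (0.01, 0.2):
        alive = count_surviving_worlds(p, periods=10, n_worlds=2000)
        print(f"true rate {p}: {alive} of 2000 worlds survive; "
              f"each observes {extinctions_in_observed_history(True)} extinctions")
```

Whatever the true rate, every observer records zero past extinctions, so the historical record alone cannot distinguish a safe world from a dangerous one. Only the number of surviving worlds differs, and no single observer can see that number.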

To understand the dynamics of an unprecedented, unrecoverable global civilizational collapse (a type of existential risk), it may be instructive to study the various local civilizational collapses that have occurred throughout human history.[25] For instance, civilizations such as the Roman Empire have ended in a loss of centralized governance and a major civilization-wide loss of infrastructure and advanced technology. However, these examples demonstrate that societies appear to be fairly resilient to catastrophe; for example, Medieval Europe survived the Black Death without suffering anything resembling a civilization collapse despite losing 25 to 50 percent of its population.[26]

Incentives and coordination

There are economic reasons that can explain why so little effort is going into existential risk reduction. It is a global public good, so we should expect it to be undersupplied by markets.[9] Even if a large nation invests in risk mitigation measures, that nation will enjoy only a small fraction of the benefit of doing so. Furthermore, existential risk reduction is an intergenerational global public good, since most of the benefits of existential risk reduction would be enjoyed by future generations, and though these future people would in theory perhaps be willing to pay substantial sums for existential risk reduction, no mechanism for such a transaction exists.[9]

Cognitive biases

Numerous cognitive biases can influence people's judgment of the importance of existential risks, including scope insensitivity, hyperbolic discounting, the availability heuristic, the conjunction fallacy, the affect heuristic, and the overconfidence effect.[27]

Scope insensitivity influences how bad people consider the extinction of the human race to be. For example, when people are motivated to donate money to altruistic causes, the quantity they are willing to give does not increase linearly with the magnitude of the issue: people are roughly as willing to prevent the deaths of 200,000 or 2,000 birds.[28] Similarly, people are often more concerned about threats to individuals than to larger groups.[27]

Eliezer Yudkowsky theorizes that scope neglect plays a part in public perception of existential risks:[29] [30]

Substantially larger numbers, such as 500 million deaths, and especially qualitatively different scenarios such as the extinction of the entire human species, seem to trigger a different mode of thinking... People who would never dream of hurting a child hear of existential risk, and say, "Well, maybe the human species doesn't really deserve to survive".

All past predictions of human extinction have proven to be false. To some, this makes future warnings seem less credible. Nick Bostrom argues that the absence of human extinction in the past is weak evidence that there will be no human extinction in the future, due to survivor bias and other anthropic effects.[31]

Sociobiologist E. O. Wilson argued that: "The reason for this myopic fog, evolutionary biologists contend, is that it was actually advantageous during all but the last few millennia of the two million years of existence of the genus Homo... A premium was placed on close attention to the near future and early reproduction, and little else. Disasters of a magnitude that occur only once every few centuries were forgotten or transmuted into myth."[32]

Proposed mitigation

Multi-layer defense

Defense in depth is a useful framework for categorizing risk mitigation measures into three layers of defense:[33]

- Prevention: Reducing the probability of a catastrophe occurring in the first place. Example: Measures to prevent outbreaks of new highly infectious diseases.

- Response: Preventing the scaling of a catastrophe to the global level. Example: Measures to prevent escalation of a small-scale nuclear exchange into an all-out nuclear war.

- Resilience: Increasing humanity's resilience (against extinction) when faced with global catastrophes. Example: Measures to increase food security during a nuclear winter.

Human extinction is most likely when all three defenses are weak, that is, "by risks we are unlikely to prevent, unlikely to successfully respond to, and unlikely to be resilient against".[33]
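One way to make the three-layer framing concrete is to treat extinction as requiring all three layers to fail in sequence, so that the overall probability is the product of the three conditional failure probabilities. The sketch below rests on that assumption; the function name and the numbers are hypothetical illustrations, not estimates from the cited paper.

```python
def extinction_probability(p_prevention_fails, p_response_fails, p_resilience_fails):
    """Probability that a risk passes all three layers of defense.

    Each argument is an assumed conditional probability that the layer
    fails, given that every earlier layer has already failed.
    """
    layers = (p_prevention_fails, p_response_fails, p_resilience_fails)
    for p in layers:
        if not 0.0 <= p <= 1.0:
            raise ValueError("probabilities must lie between 0 and 1")
    result = 1.0
    for p in layers:
        result *= p
    return result


if __name__ == "__main__":
    # Illustrative numbers only.
    baseline = extinction_probability(0.1, 0.1, 0.1)
    stronger_response = extinction_probability(0.1, 0.05, 0.1)
    print(baseline, stronger_response)
```

Under this assumption, halving the failure probability of any single layer halves the overall probability, which is one reason a risk is most dangerous when all three layers are weak at once.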

The unprecedented nature of existential risks poses a special challenge in designing risk mitigation measures since humanity will not be able to learn from a track record of previous events.[18]

Funding

Some researchers argue that both research and other initiatives relating to existential risk are underfunded. Nick Bostrom states that more research has been done on Star Trek, snowboarding, or dung beetles than on existential risks. Bostrom's comparisons have been criticized as "high-handed".[34] [35] As of 2020, the Biological Weapons Convention organization had an annual budget of US$1.4 million.[36]

Ecological management

Planetary management and respecting planetary boundaries have been proposed as approaches to preventing ecological catastrophes. Within the scope of these approaches, the field of geoengineering encompasses the deliberate large-scale engineering and manipulation of the planetary environment to combat or counteract anthropogenic changes in atmospheric chemistry. Some countries have made ecocide a crime.

Space colonization

Space colonization is a proposed alternative to improve the odds of surviving an extinction scenario.[37] Solutions of this scope may require megascale engineering.

Astrophysicist Stephen Hawking advocated colonizing other planets within the solar system once technology progresses sufficiently, in order to improve the chance of human survival from planet-wide events such as global thermonuclear war.[38] [39]

Billionaire Elon Musk writes that humanity must become a multiplanetary species in order to avoid extinction.[40] Musk is using his company SpaceX to develop technology he hopes will be used in the colonization of Mars.

Survival planning

Some scholars propose the establishment on Earth of one or more self-sufficient, remote, permanently occupied settlements specifically created for the purpose of surviving a global disaster.[41] [42] Economist Robin Hanson argues that a refuge permanently housing as few as 100 people would significantly improve the chances of human survival during a range of global catastrophes.[41] [43]

Food storage has been proposed globally, but the monetary cost would be high. Furthermore, it would likely contribute to the current millions of deaths per year due to malnutrition.[44]

Some survivalists stock survival retreats with multiple-year food supplies.

The Svalbard Global Seed Vault is buried 400 feet (120 m) inside a mountain on an island in the Arctic. It is designed to hold 2.5 billion seeds from more than 100 countries as a precaution to preserve the world's crops. The surrounding rock is −6 °C (21 °F) (as of 2015) but the vault is kept at −18 °C (0 °F) by refrigerators powered by locally sourced coal.[45] [46]

More speculatively, if society continues to function and if the biosphere remains habitable, calorie needs for the present human population might in theory be met during an extended absence of sunlight, given sufficient advance planning. Conjectured solutions include growing mushrooms on the dead plant biomass left in the wake of the catastrophe, converting cellulose to sugar, or feeding natural gas to methane-digesting bacteria.[47] [48]

Global catastrophic risks and global governance

Insufficient global governance creates risks in the social and political domain, but the governance mechanisms develop more slowly than technological and social change. There are concerns from governments, the private sector, as well as the general public about the lack of governance mechanisms to efficiently deal with risks, negotiate and adjudicate between diverse and conflicting interests. This is further underlined by an understanding of the interconnectedness of global systemic risks.[49] In absence or anticipation of global governance, national governments can act individually to better understand, mitigate and prepare for global catastrophes.[50]

Climate emergency plans

In 2018, the Club of Rome called for greater climate change action and published its Climate Emergency Plan, which proposes ten action points to limit global average temperature increase to 1.5 degrees Celsius.[51] Further, in 2019, the Club published the more comprehensive Planetary Emergency Plan.[52]

There is evidence to suggest that collectively engaging with the emotional experiences that emerge when contemplating the vulnerability of the human species within the context of climate change allows for these experiences to be adaptive. When collective engagement with and processing of emotional experiences is supportive, this can lead to growth in resilience, psychological flexibility, tolerance of emotional experiences, and community engagement.[53]

Moving the Earth

In a few billion years, the Sun will expand into a red giant, swallowing the Earth. This can be avoided by moving the Earth farther out from the Sun, keeping the temperature roughly constant. That can be accomplished by tweaking the orbits of comets and asteroids so they pass close to the Earth in such a way that they add energy to the Earth's orbit.[54] Since the Sun's expansion is slow, roughly one such encounter every 6,000 years would suffice.[citation needed]

Skeptics and opponents

Psychologist Steven Pinker has called existential risk a "useless category" that can distract from real threats such as climate change and nuclear war.[34]

Organizations

The Bulletin of the Atomic Scientists (est. 1945) is one of the oldest global risk organizations, founded after the public became alarmed by the potential of atomic warfare in the aftermath of WWII. It studies risks associated with nuclear war and energy and famously maintains the Doomsday Clock established in 1947. The Foresight Institute (est. 1986) examines the risks of nanotechnology and its benefits. It was one of the earliest organizations to study the unintended consequences of otherwise harmless technology gone haywire at a global scale. It was founded by K. Eric Drexler who postulated "grey goo".[55] [56]

Beginning after 2000, a growing number of scientists, philosophers and tech billionaires created organizations devoted to studying global risks both inside and outside of academia.[57]

Independent non-governmental organizations (NGOs) include the Machine Intelligence Research Institute (est. 2000), which aims to reduce the risk of a catastrophe caused by artificial intelligence,[58] with donors including Peter Thiel and Jed McCaleb.[59] The Nuclear Threat Initiative (est. 2001) seeks to reduce nuclear, biological and chemical threats worldwide and to contain damage after an event.[8] It maintains a nuclear material security index.[60] The Lifeboat Foundation (est. 2009) funds research into preventing a technological catastrophe.[61] Most of the research money funds projects at universities.[62] The Global Catastrophic Risk Institute (est. 2011) is a US-based non-profit, non-partisan think tank founded by Seth Baum and Tony Barrett. GCRI does research and policy work across various risks, including artificial intelligence, nuclear war, climate change, and asteroid impacts.[63] The Global Challenges Foundation (est. 2012), based in Stockholm and founded by Laszlo Szombatfalvy, releases a yearly report on the state of global risks.[64] [65] The Future of Life Institute (est. 2014) works to reduce extreme, large-scale risks from transformative technologies, as well as steer the development and use of these technologies to benefit all life, through grantmaking, policy advocacy in the United States, European Union and United Nations, and educational outreach.[7] Elon Musk, Vitalik Buterin and Jaan Tallinn are some of its biggest donors.[66] The Center on Long-Term Risk (est. 2016), formerly known as the Foundational Research Institute, is a British organization focused on reducing risks of astronomical suffering (s-risks) from emerging technologies.[67]

University-based organizations include the Future of Humanity Institute (est. 2005) which researches the questions of humanity's long-term future, especially existential risk.[5] It was founded by Nick Bostrom and is based at Oxford University.[5] The Centre for the Study of Existential Risk (est. 2012) is a Cambridge University-based organization which studies four major technological risks: artificial intelligence, biotechnology, global warming and warfare.[6] All are human-made risks, as Huw Price explained to the AFP news agency, "It seems a reasonable prediction that some time in this or the next century intelligence will escape from the constraints of biology". He added that when this happens "we're no longer the smartest things around," and will risk being at the mercy of "machines that are not malicious, but machines whose interests don't include us."[68] Stephen Hawking was an acting adviser. The Millennium Alliance for Humanity and the Biosphere is a Stanford University-based organization focusing on many issues related to global catastrophe by bringing together members of academia in the humanities.[69] [70] It was founded by Paul Ehrlich, among others.[71] Stanford University also has the Center for International Security and Cooperation focusing on political cooperation to reduce global catastrophic risk.[72] The Center for Security and Emerging Technology was established in January 2019 at Georgetown's Walsh School of Foreign Service and will focus on policy research of emerging technologies with an initial emphasis on artificial intelligence.[73] They received a grant of 55M USD from Good Ventures as suggested by Open Philanthropy.[73]

Other risk assessment groups are based in or are part of governmental organizations. The World Health Organization (WHO) includes a division called the Global Alert and Response (GAR) which monitors and responds to global epidemic crises.[74] GAR helps member states with training and coordination of response to epidemics.[75] The United States Agency for International Development (USAID) has its Emerging Pandemic Threats Program which aims to prevent and contain naturally generated pandemics at their source.[76] The Lawrence Livermore National Laboratory has a division called the Global Security Principal Directorate which researches on behalf of the government issues such as bio-security and counter-terrorism.[77]

See also

- Apocalyptic and post-apocalyptic fiction

- Artificial intelligence arms race

- Cataclysmic pole shift hypothesis

- Community resilience

- Doomsday cult

- Eschatology

- Extreme risk

- Failed state

- Fermi paradox

- Foresight (psychology)

- Future of Earth

- Future of the Solar System

- Global Risks Report

- Great Filter

- Holocene extinction

- Impact event

- List of global issues

- Nuclear proliferation

- Outside Context Problem

- Planetary boundaries

- Rare events

- The Sixth Extinction: An Unnatural History (nonfiction book)

- Social degeneration

- Societal collapse

- Speculative evolution: Studying hypothetical animals that could one day inhabit Earth after an existential catastrophe.

- Survivalism

- Tail risk

- Timeline of the far future

- Ultimate fate of the universe

- World Scientists' Warning to Humanity

Notes

- ^ Schulte, P.; et al. (March 5, 2010). "The Chicxulub Asteroid Impact and Mass Extinction at the Cretaceous-Paleogene Boundary" (PDF). Science. 327 (5970): 1214–1218. Bibcode:2010Sci...327.1214S. doi:10.1126/science.1177265. PMID 20203042. S2CID 2659741.

- ^ Bostrom, Nick (2008). Global Catastrophic Risks (PDF). Oxford University Press. p. 1.

- ^ a b Ripple WJ, Wolf C, Newsome TM, Galetti M, Alamgir M, Crist E, Mahmoud MI, Laurance WF (November 13, 2017). "World Scientists' Warning to Humanity: A Second Notice". BioScience. 67 (12): 1026–1028. doi:10.1093/biosci/bix125.

- ^ Bostrom, Nick (March 2002). "Existential Risks: Analyzing Human Extinction Scenarios and Related Hazards". Journal of Evolution and Technology. 9.

- ^ a b c "About FHI". Future of Humanity Institute. Retrieved August 12, 2021.

- ^ a b "About us". Centre for the Study of Existential Risk. Retrieved August 12, 2021.

- ^ a b "The Future of Life Institute". Future of Life Institute. Retrieved May 5, 2014.

- ^ a b "Nuclear Threat Initiative". Nuclear Threat Initiative . Retrieved June 5, 2015.

- ^ a b c d e f Bostrom, Nick (2013). "Existential Risk Prevention as Global Priority" (PDF). Global Policy. 4 (1): 15–31. doi:10.1111/1758-5899.12002 – via Existential Risk.

- ^ Bostrom, Nick; Cirkovic, Milan (2008). Global Catastrophic Risks. Oxford: Oxford University Press. p. 1. ISBN 978-0-19-857050-9.

- ^ Ziegler, Philip (2012). The Black Death. Faber and Faber. p. 397. ISBN 9780571287116.

- ^ Muehlhauser, Luke (March 15, 2017). "How big a deal was the Industrial Revolution?". lukemuelhauser.com. Retrieved August 3, 2020.

- ^ Taubenberger, Jeffery; Morens, David (2006). "1918 Influenza: the Mother of All Pandemics". Emerging Infectious Diseases. 12 (1): 15–22. doi:10.1257/jep.24.2.163. PMC 3291398. PMID 16494711.

- ^ Posner, Richard A. (2006). Catastrophe: Risk and Response. Oxford: Oxford University Press. ISBN 978-0195306477. Introduction, "What is Catastrophe?"

- ^ Ord, Toby (2020). The Precipice: Existential Risk and the Future of Humanity. New York: Hachette. ISBN 9780316484916.

This is an equivalent, though crisper statement of Nick Bostrom's definition: "An existential risk is one that threatens the premature extinction of Earth-originating intelligent life or the permanent and drastic destruction of its potential for desirable future development." Source: Bostrom, Nick (2013). "Existential Risk Prevention as Global Priority". Global Policy. 4:15-31.

- ^ Cotton-Barratt, Owen; Ord, Toby (2015), Existential risk and existential hope: Definitions (PDF), Future of Humanity Institute – Technical Report #2015-1, pp. 1–4

- ^ Bostrom, Nick (2009). "Astronomical Waste: The opportunity cost of delayed technological development". Utilitas. 15 (3): 308–314. CiteSeerX 10.1.1.429.2849. doi:10.1017/s0953820800004076. S2CID 15860897.

- ^ a b c d e f g h Ord, Toby (2020). The Precipice: Existential Risk and the Future of Humanity. New York: Hachette. ISBN 9780316484916.

- ^ a b Bryan Caplan (2008). "The totalitarian threat". Global Catastrophic Risks, eds. Bostrom & Cirkovic (Oxford University Press): 504-519. ISBN 9780198570509

- ^ Glover, Dennis (June 1, 2017). "Did George Orwell secretly rewrite the end of Nineteen Eighty-Four as he lay dying?". The Sydney Morning Herald. Retrieved November 21, 2021.

Winston's creator, George Orwell, believed that freedom would eventually defeat the truth-twisting totalitarianism portrayed in Nineteen Eighty-Four.

- ^ Orwell, George (1949). Nineteen Eighty-Four. A novel. London: Secker & Warburg.

- ^ Sagan, Carl (Winter 1983). "Nuclear War and Climatic Catastrophe: Some Policy Implications". Foreign Affairs. Council on Foreign Relations. doi:10.2307/20041818. JSTOR 20041818. Retrieved August 4, 2020.

- ^ Jebari, Karim (2014). "Existential Risks: Exploring a Robust Risk Reduction Strategy" (PDF). Science and Engineering Ethics. 21 (3): 541–54. doi:10.1007/s11948-014-9559-3. PMID 24891130. S2CID 30387504. Retrieved August 26, 2018.

- ^ a b Cirkovic, Milan M.; Bostrom, Nick; Sandberg, Anders (2010). "Anthropic Shadow: Observation Selection Effects and Human Extinction Risks" (PDF). Risk Analysis. 30 (10): 1495–1506. doi:10.1111/j.1539-6924.2010.01460.x. PMID 20626690. S2CID 6485564.

- ^ Kemp, Luke (February 2019). "Are we on the road to civilization collapse?". BBC . Retrieved August 12, 2021.

- ^ Ord, Toby (2020). The Precipice: Existential Risk and the Future of Humanity. ISBN 9780316484893.

Europe survived losing 25 to 50 percent of its population in the Black Death, while keeping civilization firmly intact

- ^ a b Yudkowsky, Eliezer (2008). "Cognitive Biases Potentially Affecting Judgment of Global Risks" (PDF). Global Catastrophic Risks: 91–119. Bibcode:2008gcr..book...86Y.

- ^ Desvousges, W.H., Johnson, F.R., Dunford, R.W., Boyle, K.J., Hudson, S.P., and Wilson, N. 1993, Measuring natural resource damages with contingent valuation: tests of validity and reliability. In Hausman, J.A. (ed), Contingent Valuation: A Critical Assessment, pp. 91–159 (Amsterdam: North Holland).

- ^ Bostrom 2013.

- ^ Yudkowsky, Eliezer. "Cognitive biases potentially affecting judgment of global risks". Global catastrophic risks 1 (2008): 86. p.114

- ^ "We're Underestimating the Risk of Human Extinction". The Atlantic. March 6, 2012. Retrieved July 1, 2016.

- ^ Is Humanity Suicidal? The New York Times Magazine (May 30, 1993)

- ^ a b Cotton-Barratt, Owen; Daniel, Max; Sandberg, Anders (2020). "Defence in Depth Against Human Extinction: Prevention, Response, Resilience, and Why They All Matter". Global Policy. 11 (3): 271–282. doi:10.1111/1758-5899.12786. ISSN 1758-5899. PMC 7228299. PMID 32427180.

- ^ a b Kupferschmidt, Kai (January 11, 2018). "Could science destroy the world? These scholars want to save us from a modern-day Frankenstein". Science. AAAS. Retrieved April 20, 2020.

- ^ "Oxford Institute Forecasts The Possible Doom Of Humanity". Popular Science. 2013. Retrieved April 20, 2020.

- ^ Toby Ord (2020). The precipice: Existential risk and the future of humanity. ISBN 9780316484893.

The international body responsible for the connected prohibition of bioweapons (the Biological Weapons Convention) has an annual budget of $1.4 million - less than the average McDonald's restaurant

- ^ "Mankind must abandon earth or face extinction: Hawking", physorg.com, August 9, 2010, retrieved January 23, 2012

- ^ Malik, Tariq (April 13, 2013). "Stephen Hawking: Humanity Must Colonize Space to Survive". Space.com. Retrieved July 1, 2016.

- ^ Shukman, David (January 19, 2016). "Hawking: Humans at risk of lethal 'own goal'". BBC News. Retrieved July 1, 2016.

- ^ Elon Musk thinks life on earth will go extinct, and is putting most of his fortune toward colonizing Mars

- ^ a b Matheny, Jason Gaverick (2007). "Reducing the Risk of Human Extinction" (PDF). Risk Analysis. 27 (5): 1335–1344. doi:10.1111/j.1539-6924.2007.00960.x. PMID 18076500. S2CID 14265396.

- ^ Wells, Willard (2009). Apocalypse when?. Praxis. ISBN 978-0387098364.

- ^ Hanson, Robin. "Catastrophe, social collapse, and human extinction". Global catastrophic risks 1 (2008): 357.

- ^ Smil, Vaclav (2003). The Earth's Biosphere: Evolution, Dynamics, and Change. MIT Press. p. 25. ISBN 978-0-262-69298-4.

- ^ Lewis Smith (February 27, 2008). "Doomsday vault for world's seeds is opened under Arctic mountain". The Times Online. London. Archived from the original on May 12, 2008.

- ^ Suzanne Goldenberg (May 20, 2015). "The doomsday vault: the seeds that could save a post-apocalyptic world". The Guardian. Retrieved June 30, 2017.

- ^ "Here's how the world could end - and what we can do about it". Science. AAAS. July 8, 2016. Retrieved March 23, 2018.

- ^ Denkenberger, David C.; Pearce, Joshua M. (September 2015). "Feeding everyone: Solving the food crisis in event of global catastrophes that kill crops or obscure the sun" (PDF). Futures. 72: 57–68. doi:10.1016/j.futures.2014.11.008.

- ^ "Global Challenges Foundation | Understanding Global Systemic Risk". globalchallenges.org. Archived from the original on August 16, 2017. Retrieved August 15, 2017.

- ^ "Global Catastrophic Risk Policy". gcrpolicy.com. Retrieved August 11, 2019.

- ^ Club of Rome (2018). "The Climate Emergency Plan". Retrieved August 17, 2020.

- ^ Club of Rome (2019). "The Planetary Emergency Plan". Retrieved August 17, 2020.

- ^ Kieft, J.; Bendell, J. (2021). "The responsibility of communicating hard truths about climate influenced societal disruption and collapse: an introduction to psychological research". Institute for Leadership and Sustainability (IFLAS) Occasional Papers. 7: 1–39.

- ^ Korycansky, Donald G.; Laughlin, Gregory; Adams, Fred C. (2001). "Astronomical engineering: a strategy for modifying planetary orbits". Astrophysics and Space Science. 275 (4): 349–366. arXiv:astro-ph/0102126. Bibcode:2001Ap&SS.275..349K. doi:10.1023/A:1002790227314. hdl:2027.42/41972. S2CID 5550304.

- ^ Fred Hapgood (Nov 1986). "Nanotechnology: Molecular Machines that Mimic Life" (PDF). Omni. Archived from the original (PDF) on July 27, 2013. Retrieved June 5, 2015.

- ^ Giles, Jim (2004). "Nanotech takes small step towards burying 'grey goo'". Nature. 429 (6992): 591. Bibcode:2004Natur.429..591G. doi:10.1038/429591b. PMID 15190320.

- ^ Sophie McBain (September 25, 2014). "Apocalypse soon: the scientists preparing for the end times". New Statesman. Retrieved June 5, 2015.

- ^ "Reducing Long-Term Catastrophic Risks from Artificial Intelligence". Machine Intelligence Research Institute. Retrieved June 5, 2015.

The Machine Intelligence Research Institute aims to reduce the risk of a catastrophe, should such an event eventually occur.

- ^ Angela Chen (September 11, 2014). "Is Artificial Intelligence a Threat?". The Chronicle of Higher Education. Retrieved June 5, 2015.

- ^ Alexander Sehmar (May 31, 2015). "Isis could obtain nuclear weapon from Pakistan, warns India". The Independent. Archived from the original on June 2, 2015. Retrieved June 5, 2015.

- ^ "About the Lifeboat Foundation". The Lifeboat Foundation. Retrieved April 26, 2013.

- ^ Ashlee Vance (July 20, 2010). "The Lifeboat Foundation: Battling Asteroids, Nanobots and A.I." New York Times. Retrieved June 5, 2015.

- ^ "Global Catastrophic Risk Institute". gcrinstitute.org. Retrieved March 22, 2022.

- ^ Meyer, Robinson (April 29, 2016). "Human Extinction Isn't That Unlikely". The Atlantic. Boston, Massachusetts: Emerson Collective. Retrieved April 30, 2016.

- ^ "Global Challenges Foundation website". globalchallenges.org. Retrieved April 30, 2016.

- ^ Nick Bilton (May 28, 2015). "Ava of 'Ex Machina' Is Just Sci-Fi (for Now)". New York Times. Retrieved June 5, 2015.

- ^ "About Us". Center on Long-Term Risk. Retrieved May 17, 2020.

We currently focus on efforts to reduce the worst risks of astronomical suffering (s-risks) from emerging technologies, with a focus on transformative artificial intelligence.

- ^ Hui, Sylvia (November 25, 2012). "Cambridge to study technology's risks to humans". Associated Press. Archived from the original on December 1, 2012. Retrieved January 30, 2012.

- ^ Scott Barrett (2014). Environment and Development Economics: Essays in Honour of Sir Partha Dasgupta. Oxford University Press. p. 112. ISBN 9780199677856. Retrieved June 5, 2015.

- ^ "Millennium Alliance for Humanity & The Biosphere". Millennium Alliance for Humanity & The Biosphere. Retrieved June 5, 2015.

- ^ Guruprasad Madhavan (2012). Practicing Sustainability. Springer Science & Business Media. p. 43. ISBN 9781461443483. Retrieved June 5, 2015.

- ^ "Center for International Security and Cooperation". Center for International Security and Cooperation. Retrieved June 5, 2015.

- ^ a b Anderson, Nick (February 28, 2019). "Georgetown launches think tank on security and emerging technology". Washington Post. Retrieved March 12, 2019.

- ^ "Global Alert and Response (GAR)". World Health Organization. Archived from the original on February 16, 2003. Retrieved June 5, 2015.

- ^ Kelley Lee (2013). Historical Dictionary of the World Health Organization. Rowman & Littlefield. p. 92. ISBN 9780810878587. Retrieved June 5, 2015.

- ^ "USAID Emerging Pandemic Threats Program". USAID. Archived from the original on October 22, 2014. Retrieved June 5, 2015.

- ^ "Global Security". Lawrence Livermore National Laboratory. Retrieved June 5, 2015.

Further reading

- Avin, Shahar; Wintle, Bonnie C.; Weitzdörfer, Julius; Ó hÉigeartaigh, Seán S.; Sutherland, William J.; Rees, Martin J. (2018). "Classifying global catastrophic risks". Futures. 102: 20–26. doi:10.1016/j.futures.2018.02.001.

- Corey S. Powell (2000). "20 ways the world could end suddenly", Discover Magazine

- Derrick Jensen (2006) Endgame (ISBN 1-58322-730-X).

- Donella Meadows (1972). The Limits to Growth (ISBN 0-87663-165-0).

- Edward O. Wilson (2003). The Future of Life. ISBN 0-679-76811-4

- Holt, Jim, "The Power of Catastrophic Thinking" (review of Toby Ord, The Precipice: Existential Risk and the Future of Humanity, Hachette, 2020, 468 pp.), The New York Review of Books, vol. LXVIII, no. 3 (February 25, 2021), pp. 26–29. Jim Holt writes (p. 28): "Whether you are searching for a cure for cancer, or pursuing a scholarly or artistic career, or engaged in establishing more just institutions, a threat to the future of humanity is also a threat to the significance of what you do."

- Huesemann, Michael H., and Joyce A. Huesemann (2011). Technofix: Why Technology Won't Save Us or the Environment, Chapter 6, "Sustainability or Collapse", New Society Publishers, Gabriola Island, British Columbia, Canada, 464 pages (ISBN 0865717044).

- Jared Diamond, Collapse: How Societies Choose to Fail or Succeed, Penguin Books, 2005 and 2011 (ISBN 9780241958681).

- Jean-Francois Rischard (2003). High Noon: 20 Global Problems, 20 Years to Solve Them. ISBN 0-465-07010-8

- Joel Garreau, Radical Evolution, 2005 (ISBN 978-0385509657).

- John A. Leslie (1996). The End of the World (ISBN 0-415-14043-9).

- Joseph Tainter, (1990). The Collapse of Complex Societies, Cambridge University Press, Cambridge, UK (ISBN 9780521386739).

- Martin Rees (2004). Our Final Hour: A Scientist's Warning: How Terror, Error, and Environmental Disaster Threaten Humankind's Future in This Century—On Earth and Beyond. ISBN 0-465-06863-4

- Roger-Maurice Bonnet and Lodewijk Woltjer, Surviving 1,000 Centuries: Can We Do It? (2008), Springer-Praxis Books.

- Toby Ord (2020). The Precipice - Existential Risk and the Future of Humanity. Bloomsbury Publishing. ISBN 9781526600219

External links

- "Are we on the road to civilisation collapse?". BBC. February 19, 2019.

- "Top 10 Ways to Destroy Earth". livescience.com. LiveScience. Archived from the original on January 1, 2011.

- "What a way to go" from The Guardian. Ten scientists name the biggest dangers to Earth and assess the chances they will happen. April 14, 2005.

- Annual Reports on Global Risk by the Global Challenges Foundation

- Center on Long-Term Risk

- Global Catastrophic Risk Policy

- Humanity under threat from perfect storm of crises – study. The Guardian. February 6, 2020.

- Stephen Petranek: 10 ways the world could end, a TED talk

Source: https://en.wikipedia.org/wiki/Global_catastrophic_risk
